The Coach — Building DadOps (Part 1)

My wife delivered our daughter in January 2020. No epidural, no drugs, no complications. In and out in 48 hours.

The hospital bill was $30,000.

Insurance covered $24,000. I owed $6,000 out of pocket. Nobody told me this number until after the fact. I'd budgeted $3,000. I was floored—not just by what I owed, but by the absurdity that delivering a human being naturally costs as much as a new car.

Six years and two daughters later, I watch friends and family go through the same blindside. The same sticker shock. The same scramble to figure out what they actually owe and why. Nothing has changed.

So, I decided to build something. I call it DadOps—a "mission control" for expecting fathers to understand the financial and operational reality of having a baby before the bill arrives.

But before I could build the product, I had to build the team. And that team is made of AI.

The Problem with "Just Ask ChatGPT"

When I started exploring this idea, I did what most people do: I opened a chat window and started typing.

"Help me build a financial planning app for expecting fathers."

The responses were fine. Generic. The kind of advice you'd get from a business school textbook or a blog post titled "10 Steps to Validate Your Startup Idea." Nothing wrong with it, but nothing specific to the problem I was solving.

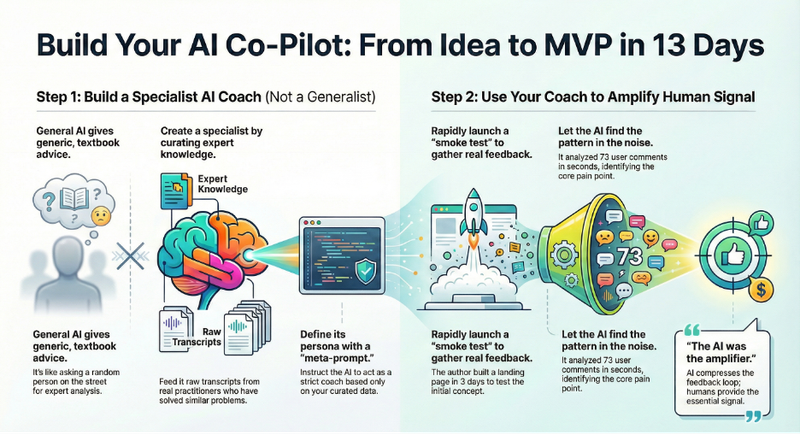

I realized I was treating the AI like a search engine with better grammar. I was asking a generalist to solve a specialist problem.

Here's what I've learned from fifteen years of engineering: when you need expertise, you don't grab someone off the street. You hire someone who's spent years in the domain. They know the failure modes. They know what questions to ask. They know what "good" looks like.

The same principle applies to AI. A general-purpose model is a generalist employee. Useful, but limited. If you want specialist output, you need to create a specialist.

Building the Coach Before Building the Product

Before I wrote a single line of code for DadOps, I built what I call a "SaaS Startup Coach"—a custom GPT configuration designed to think like a founder who's actually shipped products.

The process is straightforward but deliberate:

Step 1: Curate the knowledge base

Over the years, I've built a short list of people and resources I follow to learn about new domains. For this project, I pulled YouTube videos and podcasts from the founders on that list who have built and validated SaaS products: people who've been through the smoke test, the MVP, the pivot, the customer discovery calls. Then I pulled the transcripts. These aren't polished blog posts; they're founders thinking out loud about what actually worked and what didn't.

Step 2: Write the meta-prompt

This is the instruction set that defines the persona. I used the AI to build the AI. I fed the YouTube and podcast transcripts into Claude and issued what I call a Meta-Prompt. I didn't ask it to summarize the transcripts. I asked it to analyze them and generate a set of system instructions that would force a custom GPT to act as a coach who embodies the philosophy of those founders.

The prompt looked something like this:

"Analyze this transcript. Extract the core mental models, the specific frameworks and the step-by-step methodologies. Then, write a system prompt for a Custom GPT. This GPT should act as a strict, experienced SaaS Startup Coach who adheres rigidly to these principles. It should critique my ideas based on this text, not general knowledge. Please let me know if you have any clarifying questions before you generate these instructions."

The output was a detailed "System Character Card." It defined the AI's tone (direct, action-oriented), its constraints (never recommend coding before validation), and its knowledge base.
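If you'd rather script this step than paste transcripts into a chat window, here is a minimal sketch of assembling the meta-prompt. The folder layout, the `.txt` extension, the section separators, and the function names are all illustrative assumptions, not part of the workflow described above. It only builds the text; sending it to a model is left out.

```python
from pathlib import Path

# The meta-prompt quoted above, kept as a single instruction block.
META_PROMPT = (
    "Analyze this transcript. Extract the core mental models, the specific "
    "frameworks and the step-by-step methodologies. Then, write a system "
    "prompt for a Custom GPT. This GPT should act as a strict, experienced "
    "SaaS Startup Coach who adheres rigidly to these principles. It should "
    "critique my ideas based on this text, not general knowledge. Please let "
    "me know if you have any clarifying questions before you generate these "
    "instructions."
)


def load_transcripts(folder: str) -> list[str]:
    """Read every .txt file in a (hypothetical) transcripts folder."""
    return [
        path.read_text(encoding="utf-8")
        for path in sorted(Path(folder).glob("*.txt"))
    ]


def build_meta_prompt(transcripts: list[str]) -> str:
    """Label each transcript, then append the instruction at the end."""
    sections = [
        f"--- TRANSCRIPT {i} ---\n{text.strip()}"
        for i, text in enumerate(transcripts, start=1)
    ]
    return "\n\n".join(sections + [META_PROMPT])
```

Putting the instruction after the transcripts is a design choice in this sketch, so the request is the last thing the model reads. Whether that matters for a given model is worth testing rather than assuming.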

Step 3: Instantiate across platforms

I created the same coach in both Claude (via Projects) and Gemini (via Gems). This redundancy matters—I'll explain why in the next post.

The result isn't magic. It's a model that's been "onboarded" with domain-specific context. Now, when I log in, I'm not talking to a generic language model. I'm talking to a specialized agent that carries the domain knowledge I curated. When I describe a feature idea, it doesn't just say "sounds good." It asks: "How will you validate that fathers actually want this before you build it? What's your smoke test? What signal are you looking for?"

The Smoke Test

Before I asked the AI to narrow my idea, I tested the broad concept with real humans.

On December 26th, 2025, I committed to actually building something. Over the next three days, I ideated with both AI coaches, acquired the domain name, generated proof-of-concept screens using Stitch (Google's UI generation tool), created a landing page with Claude Code, set up ConvertKit for email capture, and deployed to Vercel.

On December 29th, I posted to r/predaddit and r/NewDads.

The response was immediate. 73 comments. 20 email subscribers. But more valuable than the volume was the content.

Dads weren't responding to "mission control." They were responding to one specific pain point, over and over:

"The one thing my insurance company couldn't tell me after many phone calls with customer service was: 'Is the baby going to have a separate deductible immediately?' Spoiler: that answer ended up being yes."

— u/PotatosDad

"It'd be handy if you could build in a calculator that let pre-dads compare the annual cost + max family out of pocket for each plan option to help determine which plan to pick when expecting, since hitting the max out of pocket is almost guaranteed now."

— u/WhoDatDad813

"I went in just with the assumption that I'd pay my out of pocket max for the years that we delivered and was just pleasantly surprised for everything we didn't have to pay."

— u/tincantincan23

I had conversations with five of the subscribers via Google Meet. Same pattern. The gear lists, the task roadmaps—useful, but not urgent. The insurance math? That was the wound.

The Pattern Extraction

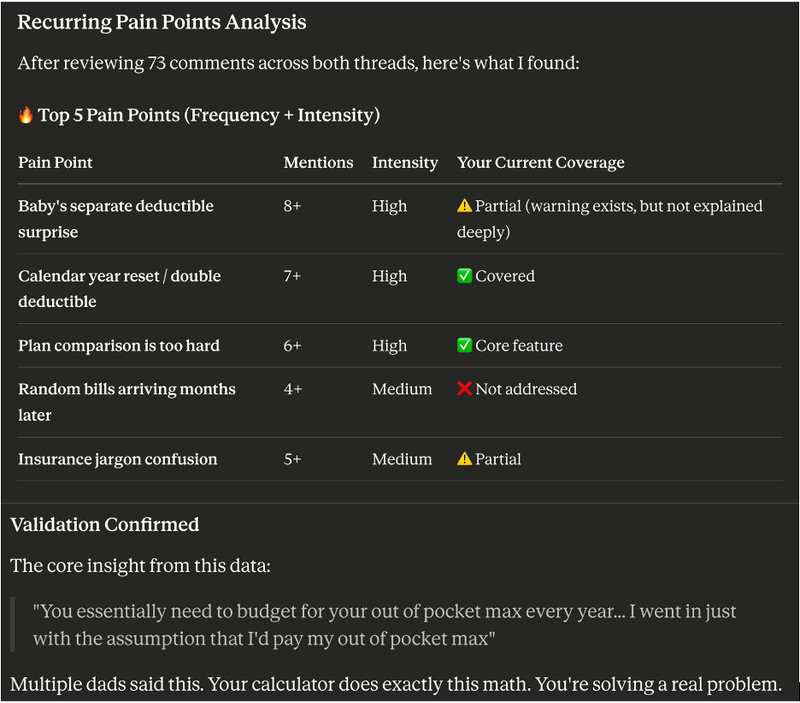

Here's where the AI coach earned its keep.

I extracted all 73 Reddit comments into a CSV. I fed it into both Claude and Gemini simultaneously with a simple prompt:

"I posted about this on Reddit and the attached comments are user feedback. What recurring pain points do you see?"

Both models returned structured analyses within seconds. Here's an excerpt from the actual, unedited output:

The AI didn't generate the insight. The humans did. But the AI compressed hours of pattern-matching into minutes. It surfaced what I might have eventually seen—but faster, and with more confidence that I wasn't just hearing what I wanted to hear.
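One cheap way to double-check a model's summary against the raw comments is a plain keyword tally over the same CSV. The sketch below is illustrative only: the `body` column name and the theme keywords are assumptions, not the actual export format or anything the models produced.

```python
import csv
import io
from collections import Counter

# Hypothetical themes and keywords; adjust them to your own comments.
THEMES = {
    "deductible": ["deductible"],
    "out-of-pocket max": ["out of pocket", "out-of-pocket"],
    "plan comparison": ["compare", "which plan", "plan option"],
}


def tally_themes(csv_text: str, column: str = "body") -> Counter:
    """Count how many comments mention each theme at least once."""
    counts: Counter = Counter()
    for row in csv.DictReader(io.StringIO(csv_text)):
        text = (row.get(column) or "").lower()
        for theme, keywords in THEMES.items():
            if any(keyword in text for keyword in keywords):
                counts[theme] += 1
    return counts
```

A tally like this is crude, since it misses paraphrases and sarcasm. But if the themes a model reports barely show up in a literal count, that is a signal to go back and reread the comments yourself.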

The Pivot

I'll be honest: narrowing scope wasn't what I wanted.

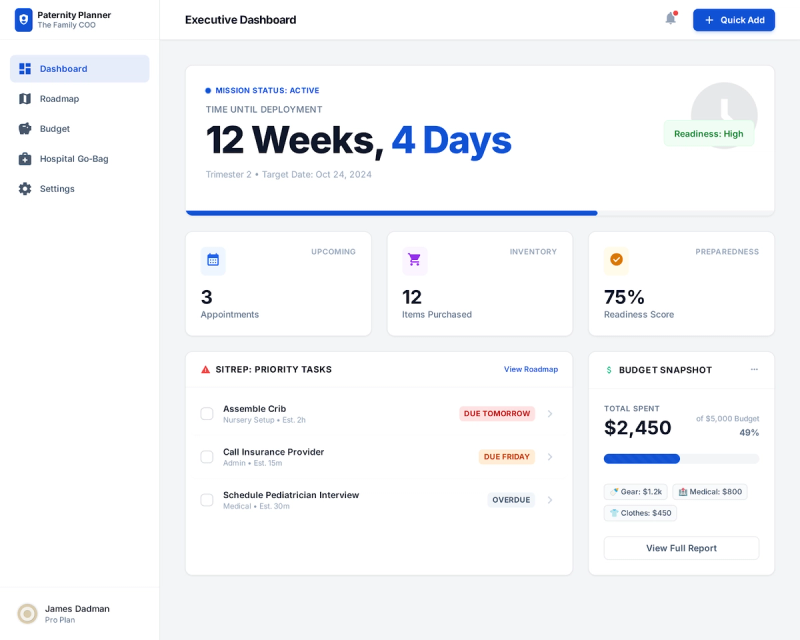

My original vision was a full-blown dashboard—budgeting, task tracking, a deployment roadmap for expecting fathers. The proof-of-concept screens I'd generated showed a beautiful mission control interface. I was attached to that vision.

But the signal was undeniable. Reddit users weren't asking for a dashboard. They were asking for a calculator. Specifically: "compare the annual cost + max family out of pocket for each plan option." That's a direct quote from the comments.

The startup coach had trained me for this moment. One of the core principles baked into its instructions: never build before validation. Start with the smallest thing that tests the riskiest assumption.

So I pivoted. Not to a dashboard. To a single-feature MVP: an insurance plan comparison calculator that flags double-deductible scenarios and shows you which plan actually costs less in a birth year.
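To make the math concrete, here is a minimal sketch of that kind of comparison. It is not the DadOps implementation. It assumes the worst case the Reddit commenters described (you hit the family out-of-pocket max in a birth year), the plan names and dollar figures are made up, and the separate-deductible flag is something you would have to confirm with your insurer.

```python
from dataclasses import dataclass


@dataclass
class Plan:
    name: str
    monthly_premium: float
    family_oop_max: float
    # Assumption: True if the insurer says the baby gets a separate deductible.
    baby_separate_deductible: bool


def birth_year_cost(plan: Plan) -> float:
    """Worst case: twelve months of premiums plus the family out-of-pocket max."""
    return 12 * plan.monthly_premium + plan.family_oop_max


def compare(plans: list[Plan]) -> list[tuple[str, float, bool]]:
    """Return (name, worst-case cost, flagged) rows, cheapest first."""
    rows = [
        (p.name, birth_year_cost(p), p.baby_separate_deductible) for p in plans
    ]
    return sorted(rows, key=lambda row: row[1])


# Made-up example plans, for illustration only.
plans = [
    Plan("Low premium / high max", 400.0, 12000.0, True),
    Plan("High premium / low max", 650.0, 6000.0, False),
]

for name, cost, flagged in compare(plans):
    note = "  <- baby may carry a separate deductible" if flagged else ""
    print(f"{name}: ${cost:,.0f}{note}")
```

With these invented numbers, the plan with the higher premium comes out $3,000 cheaper in a birth year ($13,800 versus $16,800), which is the kind of result that is easy to miss when you only compare monthly premiums.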

On January 8th, 2026—thirteen days after I started—the MVP was live.

I still want to build the full mission control. But now I have a validated entry point, a growing email list, and a product that solves a real problem. The path to the vision is different from what I anticipated, but it's grounded in evidence, not assumption.

The Key Insight: AI Amplifies Human Signal

Here's what this process taught me:

AI didn't tell me what to build. Humans did.

What AI did was compress the feedback loop. It helped me move from idea to smoke test in three days. It extracted patterns from 73 comments in seconds. It held me accountable to validation principles when I wanted to skip ahead to building.

The coach didn't replace my thinking—it structured it. The pattern extraction didn't replace reading the comments—it accelerated synthesis. The AI was never the source of insight. It was the amplifier.

This distinction matters. If you expect AI to generate product ideas from nothing, you'll get generic output. If you use AI to process human feedback faster and hold yourself to a disciplined methodology, you'll build things people actually want.

What This Means For You

If you're exploring AI for product development—or any domain-specific work—consider this before your next chat session:

1. Curate domain expertise. Find content from people who've actually done the thing you're trying to do. Transcripts, case studies, post-mortems. Raw signal beats polished advice.

2. Write explicit instructions. Tell the model what role it's playing, what framework it should use, and what behaviors you want. Be specific about what "good" looks like.

3. Create the specialist before asking specialist questions. The upfront investment pays dividends across every conversation.

4. Use AI to process human feedback, not replace it. The signal comes from real users. AI compresses the time between hearing them and acting on what they said.

This isn't about tricking the AI or finding magic prompts. It's about recognizing that models respond to context, and the quality of that context is in your control.

What's Next

In the next post, I'll explain why I run the same ideation through both Claude and Gemini—and what happens when they disagree. Spoiler: the disagreement is the point.

If you want to follow the DadOps journey: dadops.one

This is Part 1 of a 4-part series on AI-accelerated product development. Part 2: "When Claude and Gemini Disagreed."